I recently wrote a blog post about Action Language for fUML (Alf), the action language adopted and standardized by the OMG (Object Management Group). In it I alluded to something I call “model driven workflow” (MDW): a workflow that embraces modeling across the whole software development lifecycle, integrating design, development, and testing in an unparalleled way.

Let’s start by looking at what this means for integrating testing with design. Traditionally, a design organization produces a specification of the system’s operation (that is, the design of the system to be implemented and tested), which the testing organization must then interpret and turn into a test suite through fully manual test design and test implementation. This is a big can of worms. The process is time-consuming, tedious, and error-prone, and it often results in missed delivery schedules, budget overruns, and poor product quality.

To address this, we use MDW to provide a powerful TDD (Test Driven Development) approach in which test cases can be created before the implementation is ready. Here the testing organization reuses the assets created by the design organization directly, as-is, through system model driven MBT (model-based testing). I say “system model driven MBT” because the technology must meet many requirements that a typical MBT tool does not fulfill. The most important of these is that it must be able to create test cases, or more precisely full test oracles, from those design assets in a scalable manner. Each oracle contains both the input needed to stimulate the system under test and the exact expected output, with complete data including timing-related aspects. Only a technology such as Conformiq is capable of delivering this.

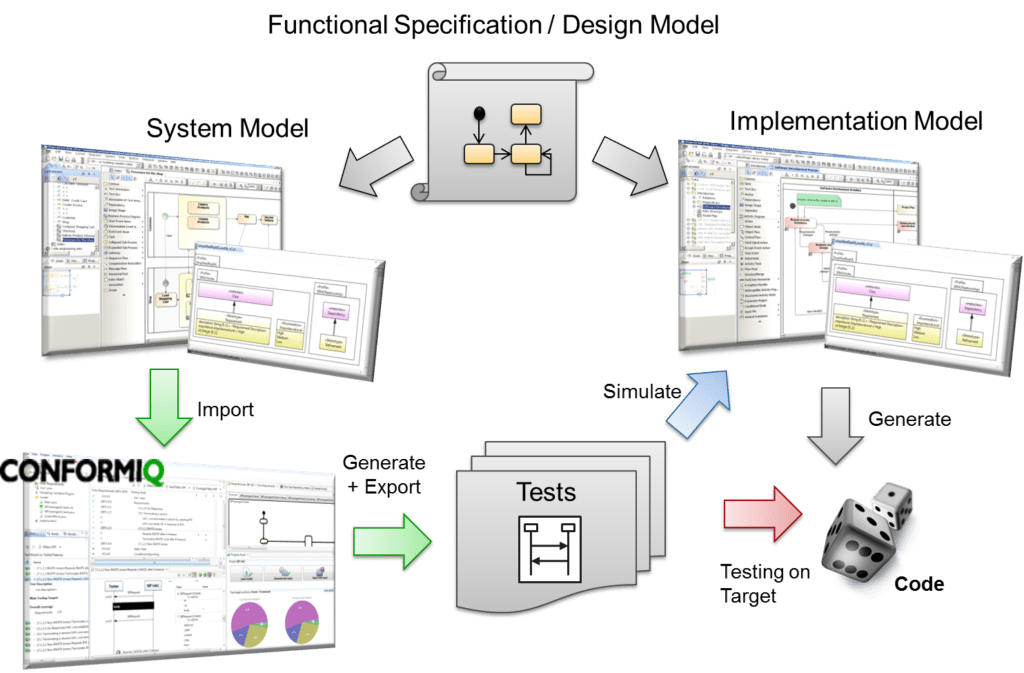

Take a look at the figure above. First, the design organization creates a design model of the system, which we often call a “functional specification” or a “specification / design model”. Both the test and development organizations inherit this model and thus get a jump start, since neither has to start its work from scratch.

The test organization then takes this inherited model and enriches it with testing-related details. With Conformiq, testers do not design test cases manually; the Conformiq tool does that for them. So instead of writing test scripts or even test models by hand, they take the specification as-is, annotate it with some testing-related details, and let the Conformiq test generation platform work out all the needed test cases. Developers, on the other hand, add the implementation details to the model. Both developers and testers therefore work from the same common base, the one that defines the high-level operation of the system being developed. Still, the two models, while related, are separate and independent. This is crucial. Only independent models for testing and development allow the functional requirements to be verified independently, while the fact that the models are related reduces cost: data types and high-level model structures do not have to be created and maintained twice, nor communicated between the test and development teams.

It’s also worth noting that both models are executable. Testers can therefore generate test cases directly from the “system model” using Conformiq tooling, while developers can generate code from their “implementation models”. To enable early verification and round-tripping, the Conformiq tool can generate test cases that developers import into their modeling environment for model simulation. This way we can immediately identify potential discrepancies between expected and observed behavior without the target system even being available and ready. Ultimately, this means that when we do start testing on the target itself (again using test cases generated by Conformiq from the very same “system model”, possibly at the same time as the simulation test cases), we already have a good idea of the system’s quality and of what to expect from the test run.
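To make the idea of a test oracle and its reuse across simulation and target more concrete, here is a minimal Python sketch, assuming a simple message-based system under test. It is not Conformiq’s test case format or API. Every name in it (`Step`, `Oracle`, `run`, `SimulatedModel`) and the toy CONNECT/DATA/DISCONNECT protocol are hypothetical. Each step pairs a stimulus with the exact expected response and a timing bound, and the same oracle can be executed unchanged against any adapter that maps a stimulus to an observed output and latency, whether that adapter drives a model simulation or the real target.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


# Hypothetical oracle format: NOT Conformiq's actual test case representation.
@dataclass(frozen=True)
class Step:
    stimulus: str          # input sent to the system under test
    expected: str          # exact expected output
    max_latency_ms: float  # timing bound the response must meet


@dataclass(frozen=True)
class Oracle:
    name: str
    steps: Tuple[Step, ...]


# Any system under test (model simulation or real target) is driven through
# an adapter that maps a stimulus to (observed output, observed latency in ms).
Adapter = Callable[[str], Tuple[str, float]]


def run(oracle: Oracle, sut: Adapter) -> List[str]:
    """Execute one oracle; return failure descriptions (empty list means pass)."""
    failures = []
    for i, step in enumerate(oracle.steps):
        output, latency = sut(step.stimulus)
        if output != step.expected:
            failures.append(
                f"{oracle.name} step {i}: expected {step.expected!r}, got {output!r}")
        elif latency > step.max_latency_ms:
            failures.append(
                f"{oracle.name} step {i}: latency {latency} ms exceeds {step.max_latency_ms} ms")
    return failures


class SimulatedModel:
    """Hypothetical stand-in for an executable implementation model in a simulator."""

    def __init__(self, latency_ms: float = 0.0):
        self.connected = False
        self.latency_ms = latency_ms

    def __call__(self, stimulus: str) -> Tuple[str, float]:
        if stimulus == "CONNECT":
            self.connected = True
            return "ACK", self.latency_ms
        if self.connected and stimulus.startswith("DATA "):
            return "OK " + stimulus[len("DATA "):], self.latency_ms
        if self.connected and stimulus == "DISCONNECT":
            self.connected = False
            return "BYE", self.latency_ms
        return "ERROR", self.latency_ms


# Hypothetical generated oracle: stimuli plus exact expected outputs and timing.
suite = Oracle("connect_send_disconnect", (
    Step("CONNECT", "ACK", 50),
    Step("DATA 42", "OK 42", 50),
    Step("DISCONNECT", "BYE", 50),
))

if __name__ == "__main__":
    print(run(suite, SimulatedModel()))               # simulation run
    print(run(suite, SimulatedModel(latency_ms=80)))  # stand-in for a slow target
```

Because `run` depends only on the adapter signature, swapping the simulation for an adapter that talks to the real target does not require touching the oracle itself, which is the property the round-tripping relies on.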

The figure and description above may give the impression that MDW works only with the waterfall development model, but this is not the case. It certainly works well with waterfall, but I argue that it works even better with agile. The reason is that whenever the requirements change (something expected and even embraced in agile development methods), it is easy to reflect that change in the model, and the amount of work is roughly proportional to the size of the requirement change. Once the model has been updated, a new test suite can be generated automatically. Traditionally, by contrast, a requirements change demands significant effort to analyze and update the existing test suites. Every test case must be reviewed to decide whether it and its associated data are still valid, whether they need to be modified, or whether they should be removed altogether. On top of that, someone has to decide whether new tests are needed to close the resulting coverage gap, and if so, what kind.

In summary, the model driven workflow enables:

- More reuse

- Increased productivity

- Shorter turnaround time

- Improved quality

- Reduced maintenance effort and cost