OK, I admit that this is a bit far-fetched. I can’t recall ever meeting a test manager whose biggest concern was how to efficiently solve and test Sudokus. I was simply in the mood for some fun, and what better way to have it than working on a Sudoku problem? I bet you have firsthand experience solving these, and, just like me, you have spent a good deal of your air miles cracking those darn puzzles. Well, at least I have, so bear with me.

Instead of just opening up the last page of the local newspaper, I decided to create a Conformiq model that would solve my Sudoku problem. It is probably not the most common use case for our technology, but, like I said, I wanted to have some fun. I created a Conformiq model that simply describes the rules of Sudoku. This is what we do with Conformiq technology: we describe the problem in fairly abstract terms and let the technology solve it for us, instead of forcing us to do the hard mental work. In the case of classic Sudoku, the rules are pretty darn simple.

[well]Classic Sudoku involves a grid of 81 squares divided into 9 blocks of 3×3 squares each. Every square must contain a number from 1 to 9, and each number can appear only once in each row, each column, and each 3×3 block.[/well]
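The rules above are compact enough to state directly as code. The following is a minimal Python sketch, not Conformiq's modeling notation, that checks whether a completed 9×9 grid obeys them; the function name `is_valid_solution` and the list-of-lists grid representation are illustrative assumptions.

```python
def is_valid_solution(grid: list[list[int]]) -> bool:
    """Return True if grid is a completed 9x9 Sudoku that follows the classic rules."""
    # The grid must have exactly 9 rows of 9 squares each.
    if len(grid) != 9 or any(len(row) != 9 for row in grid):
        return False
    digits = set(range(1, 10))
    rows = [set(row) for row in grid]
    columns = [{grid[r][c] for r in range(9)} for c in range(9)]
    # The nine 3x3 blocks, each identified by its top-left corner (br, bc).
    blocks = [
        {grid[r][c] for r in range(br, br + 3) for c in range(bc, bc + 3)}
        for br in (0, 3, 6)
        for bc in (0, 3, 6)
    ]
    # Nine squares holding exactly the digits 1..9 means no digit repeats.
    return all(group == digits for group in rows + columns + blocks)


# Example: a valid completed grid built from a simple shifting pattern.
solution = [[(r * 3 + r // 3 + c) % 9 + 1 for c in range(9)] for r in range(9)]
print(is_valid_solution(solution))  # True

# Swapping two squares in the first row keeps that row valid but breaks two columns.
broken = [row[:] for row in solution]
broken[0][0], broken[0][1] = broken[0][1], broken[0][0]
print(is_valid_solution(broken))  # False
```

Checking a finished grid takes only a few lines; the hard part is finding a grid that passes the check in the first place.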

The real problem is that, while the rules are simple and quick to describe, the combinatorial complexity they produce is what makes solving Sudokus such a challenge. But if you think about it, the same applies to pretty much every non-trivial piece of logic out there: the logic itself is quite simple, yet it gives rise to hugely complex scenarios. And this is where an efficient algorithm comes in. An algorithm is so much better at solving these combinatorial problems than our brains. Yes, our brains are arguably the most complex, elegant, and intriguing structure in the whole universe, but a human brain is just horrible at solving combinatorial problems. Compared to a computer, we really suck at combinatorial puzzles. We suck big time. So, realizing that I’m no match for a computer, I simply gave my model of those simple rules to our Conformiq test generation engine, and in no time I had my answer. The engine automatically generated test data that satisfies all the rules of the model, which in this case is the solution to the puzzle.
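To make the combinatorial point concrete, here is a plain depth-first backtracking solver in Python. It is a swapped-in textbook technique shown for illustration only, not how the Conformiq engine works internally; the names `fits`, `solve`, and `PUZZLE` are hypothetical, and the puzzle is a commonly published example.

```python
Grid = list[list[int]]  # 9x9 grid; 0 marks an empty square


def fits(grid: Grid, r: int, c: int, d: int) -> bool:
    """Check whether digit d may go at (r, c) without breaking a rule."""
    if d in grid[r]:  # row rule
        return False
    if any(grid[i][c] == d for i in range(9)):  # column rule
        return False
    br, bc = 3 * (r // 3), 3 * (c // 3)  # top-left corner of the 3x3 block
    return all(grid[i][j] != d for i in range(br, br + 3) for j in range(bc, bc + 3))


def solve(grid: Grid) -> bool:
    """Fill grid in place by depth-first backtracking; return True if a solution was found."""
    for r in range(9):
        for c in range(9):
            if grid[r][c] == 0:
                for d in range(1, 10):
                    if fits(grid, r, c, d):
                        grid[r][c] = d
                        if solve(grid):
                            return True
                        grid[r][c] = 0  # dead end: undo and try the next digit
                return False  # no digit fits this square, so backtrack
    return True  # no empty squares remain


# A commonly published example puzzle (0 = empty square).
PUZZLE = [
    [5, 3, 0, 0, 7, 0, 0, 0, 0],
    [6, 0, 0, 1, 9, 5, 0, 0, 0],
    [0, 9, 8, 0, 0, 0, 0, 6, 0],
    [8, 0, 0, 0, 6, 0, 0, 0, 3],
    [4, 0, 0, 8, 0, 3, 0, 0, 1],
    [7, 0, 0, 0, 2, 0, 0, 0, 6],
    [0, 6, 0, 0, 0, 0, 2, 8, 0],
    [0, 0, 0, 4, 1, 9, 0, 0, 5],
    [0, 0, 0, 0, 8, 0, 0, 7, 9],
]

if __name__ == "__main__":
    grid = [row[:] for row in PUZZLE]  # solve a copy so the original clues survive
    if solve(grid):
        for row in grid:
            print(" ".join(map(str, row)))
    else:
        print("No solution")
```

The solver knows nothing beyond the three rules; it simply tries digits and backs out of dead ends, which is exactly the kind of tireless bookkeeping our brains are bad at.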

This brings me to the task of test design. How would you test the logic of a Sudoku application? You would probably have to solve multiple puzzles and verify the correctness of the results. But how would you design such puzzle tests? Sure, in the case of Sudoku you might say that the world is full of Sudoku puzzles and you could quickly find a set of examples to use. But what if you did not have that luxury? What if your Sudoku problem were the greenfield application you are supposed to test? How would you go about it then? Uh, that’s quite a pickle, I would argue. Yet the reality is that testers and test managers face this situation each and every day. In the case of Sudoku, I did what we humans are good at and described the problem, but then, instead of trying to solve it myself, I outsourced the whole process of solving it, i.e., the process of test design, to a computer, simply because it is so much better at it than I am. I was in a position to do so because I had the proper tools available. I didn’t need to go through the hugely complicated, time-consuming, and error-prone process of solving the puzzle in my head; I merely described the rules of Sudoku and handed the real work over to a computer.

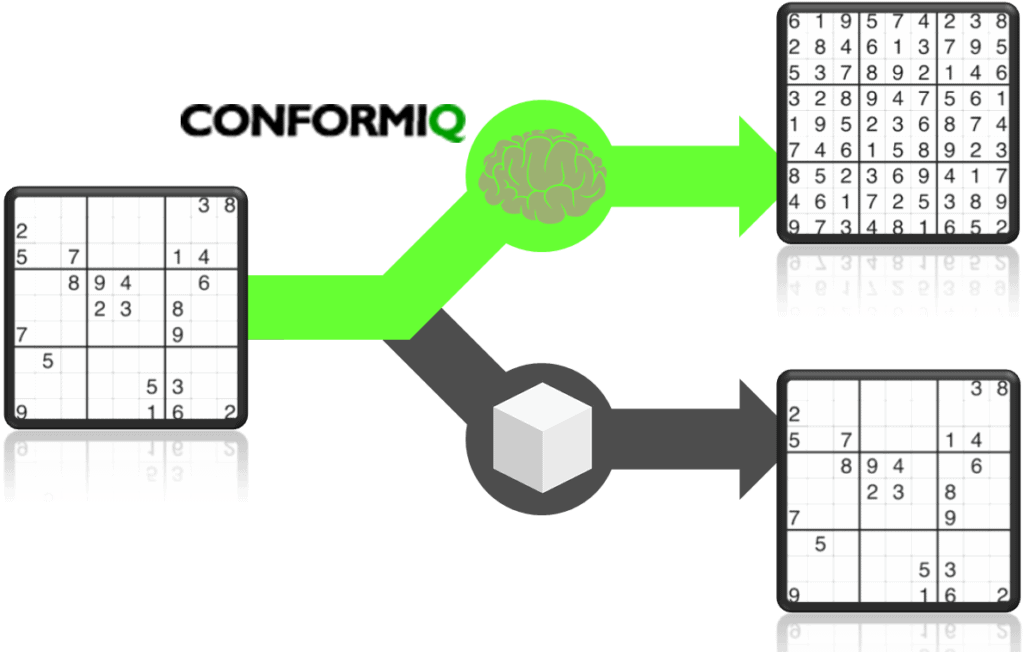

That little difference: while Conformiq also automates test data design and can therefore solve the Sudoku problem automatically, a simpler solution leaves the problem solving entirely up to the user.

This Sudoku example, albeit not something we need to deal with in our daily work, nicely demonstrates the fundamental difference between our Conformiq technology and manual test approaches, and even our competitors’ tools. Indeed, model-based testing solutions that claim to automate test design today fall short here. Yes, on the surface, the tools all look more or less the same. We all have boxes and arrows, and at the click of a button you get tests. The reality, however, is that our competitors only solve the design of test flows (i.e., they provide the elementary machinery for iterating over the model paths), while test data design is left out. The lack of this crucial functionality is often hidden behind all kinds of stories about great integrations with test data management tools and more, while the real deal is that the vast majority of your test design still needs to be carried out manually, even after deploying these tools. These tools wouldn’t help you one bit here. In the case of Sudoku, this would mean that you would still need to solve the entire puzzle manually, even after having your test design “automated”. What…? Yes, you are left to solve the whole puzzle all by yourself. So much for automation…

I do realize that most of you test managers out there do not lose sleep over a game of Sudoku, but I hope this example highlights the need to automate not only the design of test flows but also the design of test data. If test data design is left out or decoupled from the model logic, you are bound to suffer from unproductive, error-prone, and limited testing efforts.

So maybe the next time you are in a position to influence your test automation tooling, you should ask, “How would this solution help me solve a Sudoku?” And me? Well, I got to have great fun with Sudoku modeling!